January 13, 2017 / Vito Gulla / 0 Comments

An introduction is the first paragraph (sometimes, in longer essays, first paragraphs) and serves, rather obviously, to introduce your topic. It is here that you provide some kind of background or general information about your subject. For example, if you’re arguing for greater regulations on the financial industry, your introduction should give a brief history of previous regulations or the lack thereof. If you are writing criticism about Shakespeare’s The Tempest, you may want to begin by providing an overview of the more important or more recognized critical interpretations of the text which came before yours. However, that history does not always have to be so broad. While you may sometimes write about the larger ongoing discourse, you may also want to discuss your own personal history with the topic, using an anecdote to introduce it. If you’re arguing that eating meat is immoral for a philosophy essay, you may want to consider starting with a brief story about how you went from an omnivore to an herbivore. Of course, regardless of the approach you take, your introduction should always lead logically to your thesis.

The thesis statement is the heart of your essay and should, the majority of the time, come at the very end of your introduction. Typically, your thesis is a one-sentence answer to an explicitly or implicitly asked question. For example, if your instructor asks you to write an essay about the influences on Arthur Penn’s Bonnie and Clyde, they are essentially asking, “What cause or causes produced Bonnie and Clyde?” Your thesis statement should directly answer that question. For example, you may answer it by writing, “Bonnie and Clyde was a result of the New Hollywood era, incorporating the vernacular of the French New Wave and promoting the sexual mores and anti-establishment nature of the hippie movement.” Notice this answer is not definitive. In order for a thesis to be valid, it must be debatable. It is not a fact: It is the author’s opinion backed up by evidence. In other words, your thesis should serve as the roadmap to your paper. It should provide not only your argument but your support as well. In the previous example, the writer has made the following promise: He or she will show how Bonnie and Clyde was influenced by the New Hollywood era, the French New Wave, and the hippie movement. Also, the author has implicitly signaled to the reader that the body paragraphs will follow that order.

- How to Construct a Thesis: A Formula

Whenever you write a thesis, you have three possible answers: “yes,” “no,” and “maybe.” The first one is “yes, I agree with this idea.” The second one is “no, I disagree with this idea.” The third one is “this idea is both true and not true.” This should be the base of your thesis statement.

The second part of your thesis should list the evidence or support you plan to explore in the body of your essay. Therefore, you can use the following approach:

Thesis Statement = Your Argument + Point 1/Body Paragraph 1/Section 1, Point 2/Body Paragraph 2/Section 2, Point 3/Body Paragraph 3/Section 3, ad infinitum

-

The body paragraphs serve to prove the initial claim you have presented in your thesis. If, for example, you encounter a thesis stating, “Though it is too difficult to decide the success or failure of a series of wars which took place in a nearly two-hundred-year period, an investigation of the Crusades’ moral consequences yields a reasonably assertive answer: the Crusades failed because they were presented as just wars but, instead, led to institutionalized xenophobia and genocide, which continue to plague the west to this day,” you expect the first body paragraph/section to deal with how the wars were presented by church leadership, the second to deal with what the wars did, and the final one to deal with the Crusades’ lingering effects. Here, you can again follow a simple formula to construct your paragraph.

Your first sentence, or topic sentence, should disclose to your reader how this idea proves your argument. Let’s take an example from the second paragraph: “In many respects, the First Crusade sprang out of the racial and religious fears of Christian Europeans rather than a war of revenge righting unforgivable wrongs.” This is a claim. Notice it is an idea which is debatable (and not a fact).

The sentences which follow should provide “proof” for your claim. This is your evidence. It should be a specific fact or facts that you interpret to support your argument. You could use quotes, paraphrase, or summary from a text. In the previous case, we find, “As stated previously, Urban suggests that Muslim rule in Jerusalem has led to the most disturbing abuses against Christians; however, his description owes more to bigotry than it does to fact. As Marcus Bull observes: ‘Urban had almost certainly never been to the Holy Land himself, and what he said owed more to rhetoric than reality…. their treatment seldom, if ever, amounted to the sort of horror stories which Urban recounted. (11)’” Here the writer gives specific information to support his claim.

Following the evidence you present, you must explain its significance and how it connects to your overall point (your thesis). This is a warrant. In the Crusades paper, we expect to see a follow-up sentence, which states, “Therefore, Urban’s speech may not have been inspired by first-hand knowledge—or even second-hand knowledge—and it is fair to assume he was purposely preying on the ignorance of his audience.”

This is the typical process of laying out an argument. Depending on the length of the essay, this paragraph may end here or, if the writer feels as though he or she needs further evidence to support his or her case, the writer may continue to follow this process of evidence and warrants until the claim of his or her paragraph has been sufficiently “proved.”

The conclusion is often the most neglected part of academic form. Most writers tend to treat it as a simple restatement of the ideas presented in the introduction. If your reader wants to know what you’ve written in your introduction, they can reread your introduction. Think of the conclusion instead as a bigger version of what you do in your body paragraphs. The claim is your introduction, the evidence is your body, and the conclusion is your warrant. A conclusion should aim to answer the question, “So what?” Now that your reader has examined your claim and evidence, what can they do with that information?

October 1, 2015 / Vito Gulla / 0 Comments

A recent post here has garnered some attention from the internet, in particular, poet Joey De Jesus. He sent me a few messages on Twitter, reposted my article, and surprisingly, the two of us had a spirited–but in the end–friendly debate. We still disagree vehemently, but it might have been actually productive, which is odd because it took place on the internet. I would like to thank Joey for tweeting the article and posting it on Facebook, since after all, my goal is to get people talking about criticism, even if some of the conversation has reduced my argument to “white tears,” which, to my knowledge of the phrase, is an incorrect application. I don’t think it’s necessary to take others to task by name for their simplified engagement, as such arguments don’t actually state ideas or even offer criticism but instead, rely on the use of rhetorical devil-terms, designed to shut down argument rather than encourage it. With that said, however, I do want to get at the heart of what I began with “Kenneth Goldsmith and the Writ of Habeas Corpus”: Modern mainstream criticism has lost its way. Therefore, to Joey, because I hope you’re reading this, thanks.

So let me begin by saying I think I might take for granted the education I had as an undergrad. Many of today’s critics, who, as I’ve said before, are pretty highly educated, went to far more prestigious schools than me. But the thing I failed to recognize is that maybe those educations aren’t necessarily on the same page as mine. I don’t know to what extent many of today’s writers and poets learn about literary theory and the philosophical underpinnings behind a broad spectrum of critical approaches. It really depends on the program, and mine was very theory-based. (This is not to imply that your program was bad, dear reader, nor am I trying to talk down to those of you who bristle at the thought of theory. Presumably, we, and our respective programs, value and promote different ideas.) Furthermore, I’m well aware of the suspicious attitudes writers have voiced in the past about literary theory, as if it were some kind of sleight of hand meant to distract, as if it undermines their authority as authors. (It does, and it should.) But for me, my undergrad experience has made my discovery and investigations of texts far more enriching, far more worthwhile–not to mention enhanced the thought I put into my own fiction. One text could, through the power of criticism, be seen through a multitude of lenses. We can see The Great Gatsby as a New Critic, a Freudian, a Feminist, a Structuralist, a New Historian, a Post-Colonialist, and one of my particular favorites, a Deconstructionist. However, I never felt comfortable identifying as any one type of critic. There were, after all, enormous benefits to each approach–as well as drawbacks. None, as I saw it, were perfect. Often, I would use the approach that I thought best fit that text. But my question is why these critical discourses are just that: discourses and not a discourse. Why can I not take these varying ideas and make them work together, rather than compete for authority?

And that’s exactly what I plan to do now.

The basis for any modern critical discipline has come from many of the tenets of New Criticism, in particular, the intentional fallacy and close reading. Just about every other critical approach relies on these two ideas. One, as stated in “The Intentional Fallacy” by Wimsatt and Beardsley and later essentially expanded upon by Barthes as “the death of the author,” the author does not determine meaning. He or she has no greater claim on a text than anyone else. The author does not assign his or her own meaning and value. Two, the practice of close reading is meant to scrutinize each word, each mark of punctuation, every line break, every enjambment, every metaphor and simile, in order to determine how those choices determine the text’s meaning.

However, New Criticism does have its flaws.

It aims to discover the best interpretation. This, I would argue, is a mistake not only because it assumes there is one best interpretation but because that best interpretation focuses on what the text is actually about. This is where I start to differ with most critical theorists. I say that a text already has a specific meaning, that it is about that one specific idea (or specific ideas). That theme or meaning is fixed. Everything else about it is a quibble, because the rhetoric is clearly aimed at that goal, if it is a good, well-constructed text. We must recognize that at first. The other problem with New Criticism is its emphasis on “the text itself.” It dismisses valuable concepts in the rhetorical triangle like context, author, and audience for intent. Many of these points of the triangle have, thankfully, been reinstated and emphasized through the many different schools.

But, as I’ve said before, each new school of criticism only seems to acknowledge just one part of the triangle–except for Deconstruction which, at its worst, throws up its arms in an act of literary nihilism and says that meaning is “undecidable.” What would it look like if it acknowledged all parts of the rhetorical triangle, not just one? What if we married the principles of literary analysis and rhetorical analysis?

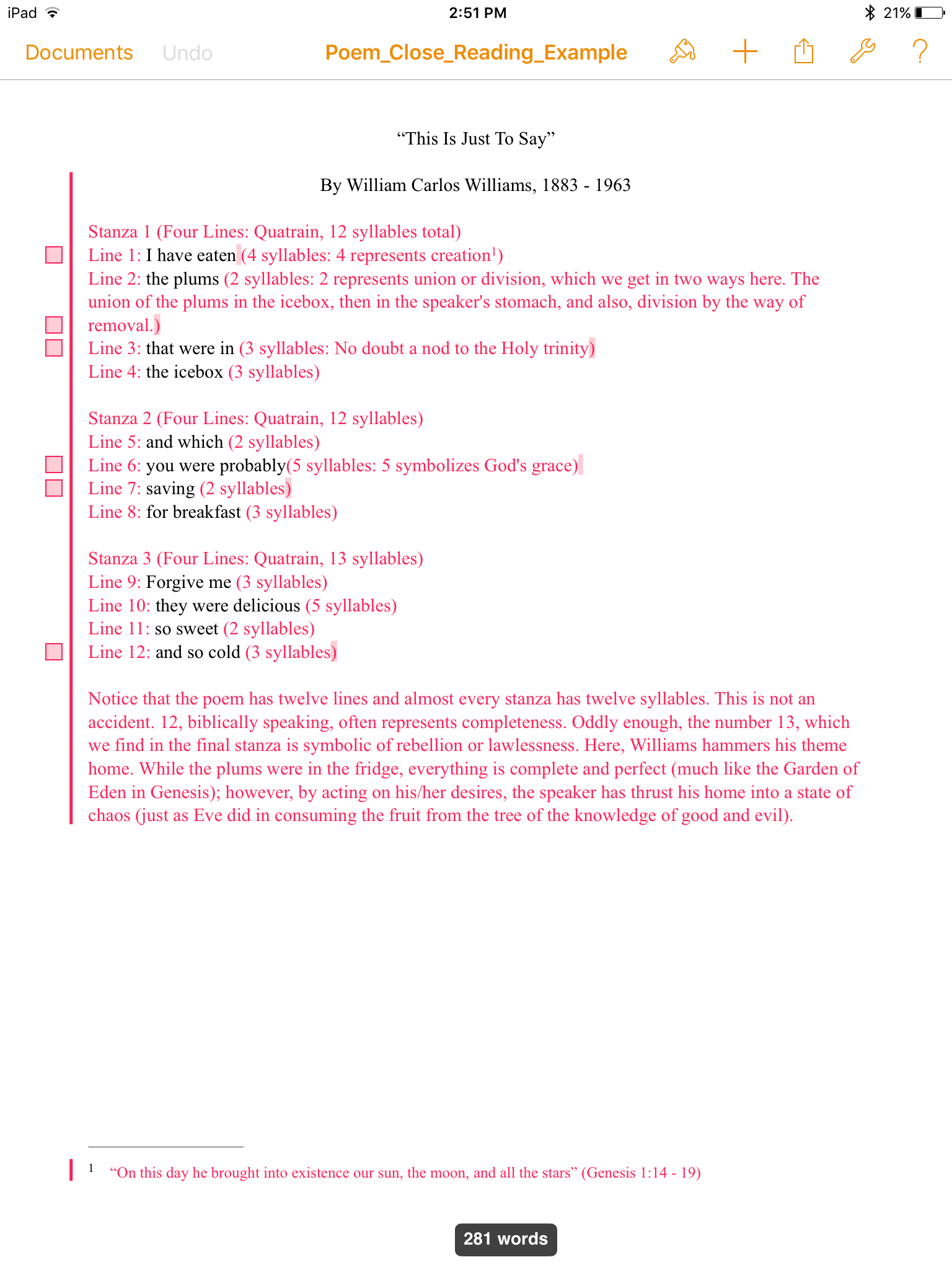

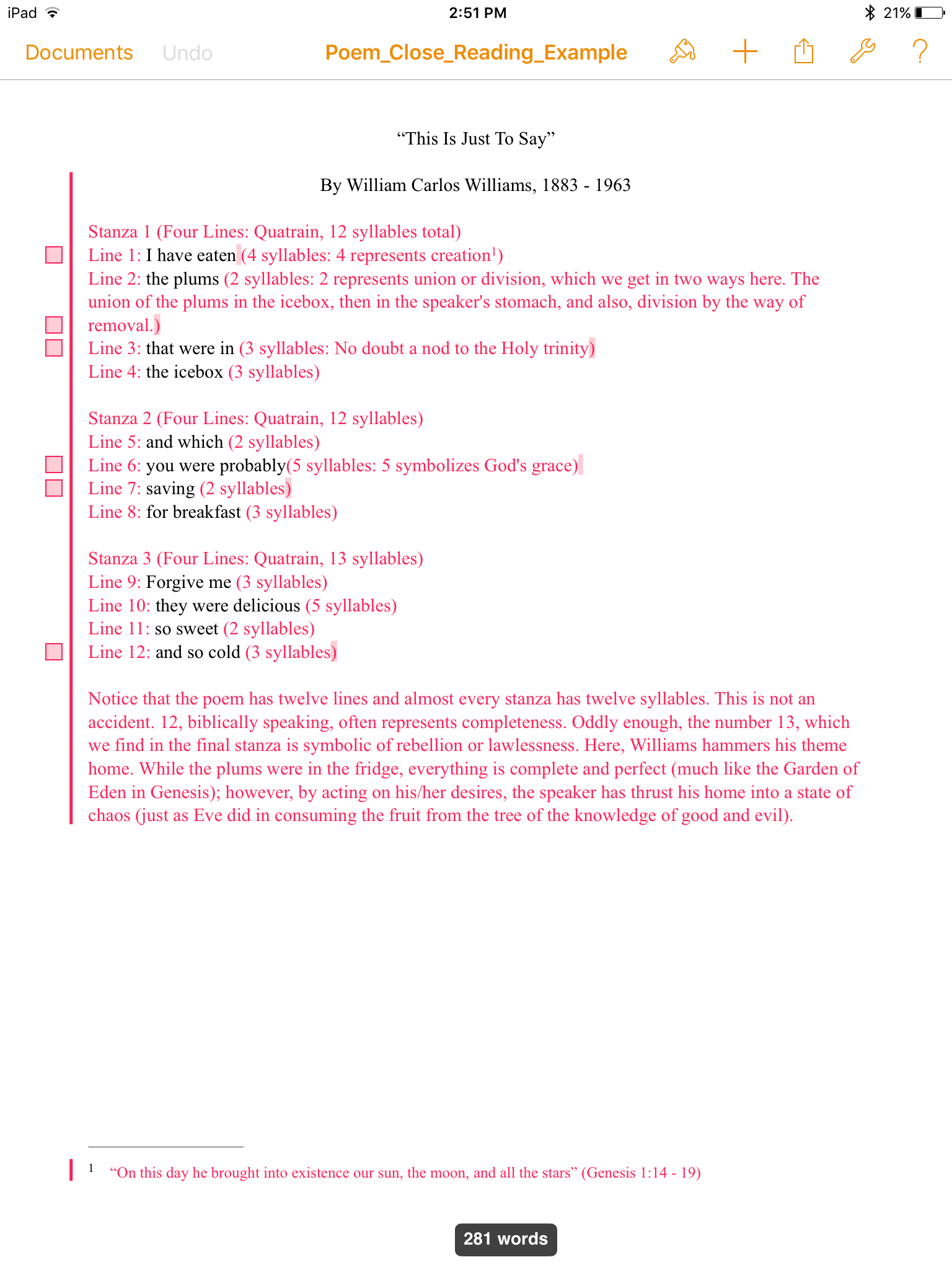

Let’s say we have a text, maybe “This is Just to Say” by William Carlos Williams. The first step should be to do an actual close reading, like this:

Post-It Note 1 (Line 1): This first line tells us a lot. First, we know that this is past tense, since it is “have eaten.” Second, this line is enjambed (it ends without punctuation in the middle of a sentence). This suggests hesitation on the part of the speaker, the “I” of the poem. There is a clear sense of conflict in the speaker.

Post-It Note 2 (Line 2): Again, we have another enjambed line, putting special emphasis on the fruit itself. This choice seems very deliberate. The eating of forbidden fruit is a popular theme throughout Western literature (think Adam and Eve), and the speaker may be drawing that parallel.

Post-It Note 3 (Line 3): Line three pauses again to emphasize the fruit’s non-existence and makes us consider its prior location as well.

Post-It Note 4 (Line 6): Here, the speaker introduces the second person, the “God” of the poem, who will, hopefully, forgive him/her.

Post-It Note 5 (Line 7): Notice that the line dwells on “saving,” which follows the line that introduces the “God” of the poem. Our speaker wants to be saved.

Post-It Note 6 (Line 12): This line which ends the poem may be a reference to the medieval view of hell. It was not a place of fire and brimstone, but a cold, cold home for sinners. The thinking was that the further away from God a person was, the further he/she was from God’s light.

This close reading observes the text itself and aims to decipher its “obvious” meaning. And since this is already a pretty simple poem, we know the theme is temptation. It is the tension between yielding and aversion. The privileged term here, seen through the speaker’s indulgence, is yielding, giving in. This, the poem tells us, is the better action. Of course, it is not that simple, since, as we learn at the end, the act is both “sweet” and “cold,” signifying that it has brought the speaker pleasure because it is delicious and pain because it has caused him or her guilt. The text is, therefore, in conflict with itself.

But in my close reading, I have also made historical and literary connections, which are not self-evident, not explicit. So the question is why? Clearly, I have a bias. I have imbued the text with what I see in it. I am actively constructing it in reader response. So we can say I have been influenced by my cultural landscape, particularly the hegemony of Christianity, as I draw connections between what I observe and with what I associate with what I observe.

And Williams has a bias too. His consciousness has also been shaped by the same cultural hegemony, corrupted by the institutional power of the Church, for the confessional nature of the poem highlights his own anxiety over partaking in such a “sin.” Foucault, in his History of Sexuality, claims, “[O]ne does not confess without the presence or virtual presence of a partner who is not simply the interlocutor but the authority who requires confession.” The poem’s speaker’s pleasure may be blissful in the moment, but afterward, it leaves him or her empty and guilt-ridden, for which the only solution is confession. Such an attitude concerning pleasure is contrary to the speaker’s (and by extension, Williams’s) own biological impulses, trapped in a Pavlovian cycle of pleasure always followed by pain.

Lastly, we must examine the context, the time and place in which the text was composed. Published in 1934, the poem is a product of its era. For the decade prior, the unbridled hedonism of Prohibition lurked beneath the surface of “dry” America, as all kinds of sexuality were celebrated by those who engaged in the counter-culture, but with the repeal of the 18th Amendment returned puritanical denial and self-flagellation.

This approach, even as rushed, sloppy, and simplified as it is here, is what we need more of, an observation of all the points of the triangle, one that presents a more complete, complex, and thorough portrait of meaning, which hopefully gets even more complicated when we factor in things like race, gender, class, et cetera. Some may claim this is tedious–and I think it is–but it is also necessary. Criticism is designed to be just that.

Furthermore–and this should go without saying–this approach does not stake a claim on ultimate meaning. It is grounded in Cartesian uncertainty: It recognizes the subjectivity of experience. If anything, it is a Kierkegaardian leap of faith. But the important idea is that it demonstrates how the critic got to where they are. This idea is still very much in its infancy: There is more to be developed here, more to be scrutinized, more to be theorized, more to be tested, more to be investigated, more to be written and revised. But at least, it’s a start.

September 29, 2015 / Vito Gulla / 0 Comments

I know I’m late to this party, but The New Yorker just ran a profile on Kenneth Goldsmith, which many have stayed silent on–and when they have been willing to talk, it’s been outright hostile. The National Book Critics Circle Board retweeted one person’s plea to the editor of Poetry Magazine to retweet the article, which was followed by responses like, “So the lesson here is be a lazy racist and get a lot of press?” and “trash.” One response I found particularly disheartening came from Justin Daugherty, founder of Sundog Lit and editor of Cartridge Lit, who wrote in a now deleted tweet, “KG [Kenneth Goldsmith] is a garbage human.” Now maybe Daugherty and others have had a chance to meet Goldsmith in person and found him to be “trash” and “garbage.” It’s well within the realm of possibility, but I think, more so, Daugherty is referring to Goldsmith’s poem “The Body of Michael Brown,” which remixes the autopsy report of the young man shot by police whose death served as a catalyst for activism and riots in Ferguson, Missouri. While I think there’s an argument to be made that such an act was “too soon,” social media and the literary blogosphere took a different approach: a shitstorm of moral outrage and indignation. Most arguments against Goldsmith’s performance, from Joey de Jesus’s essay at Apogee to Flavorwire, focused on the act itself, rather than the actual text. And you’re probably wondering: Where can I read/see this? The short answer, ironically during banned books week, is you can’t. And just about none of Goldsmith’s critics have had the chance either. So how, exactly, have so many determined so much based on so little? Don’t we need a text to criticize, to scrutinize, to determine whether it was racist or not, as so many have claimed? Shouldn’t analysis and interpretation be based on textual evidence? Probably what I find most distressing is that these writers and editors, who have uniformly condemned Goldsmith and his poem, should know better. They are, first and foremost, artists, and we have all seen plenty of challenges to our freedom in the past and present. But also these are men and women with MFAs and PhDs from the best schools in the country, where the faculty are active scholars who have faced the rigors of peer review, who, presumably, expect the same focused, logical, detailed, thorough analyses from their students. So how, then, have things gone so wrong?

#

In rhetoric and composition studies, we often talk about the rhetorical triangle, which is the connection between the writer, the audience, and the message. This, of course, is framed within a certain context. Most schools of literary criticism place greater value on one of these facets. The New Critic emphasizes intent. The Reader Response Critic focuses on audience. The Freudian psychoanalyzes the author. The New Historian privileges the context. None of these, I would say, are any more useful than the others. In fact, this is one of criticism’s biggest flaws–especially as of late. These things, individually, don’t bring us any closer to meaning. They need to be recognized in concert with one another.

Unfortunately, things have only gotten worse.

Due to the increasing influence of identity politics, the author and the context are all that matter. The text, it seems, has become irrelevant. We don’t care what was actually on the page. That should worry any serious artist or critic. As T. S. Eliot once wrote, “[A] critic must have a very highly developed sense of fact.” But this current trend ignores or disregards evidence which may be contrary to the critic’s conclusions, which often means the diction choices, the form, the imagery, the structure–all of it has been reduced to some irrelevant detail. Critics claim that a text is a vehicle through which our gender roles, class system, and structural inequalities are reinforced rather than challenged. When–and why the hell–did this happen? To that, I don’t really have an answer, but I can say that such a trend should be unnerving to any artist, regardless of his or her politics.

First of all, what artist is a complete shill for society? I couldn’t give a fuck about how good or bad he or she may be: Artists are free-thinkers. We’re independent. We have empathy, logic, humanity. We recognize the beauty and benefits of all things–even those we hate. That’s our job. Otherwise, we’re not looking at a poem or a story or a painting, but a didactic piece of shit that our audience will find more alienating than enlightening. Isn’t it OK to be OK with some of the ways that society operates? (This is not a tacit endorsement of racism or sexism or anything of the like, but more so a way to say I don’t entirely mind free markets as long as they’re regulated pretty heavily.) Secondly, I’m not fond of the deterministic philosophy which underpins much of this approach. Whether it’s Tropes vs. Women or an article in the LA Review of Books, the assumption is that society is racist, sexist, and heterosexist, even when it’s actively fighting against it. They preach to us that it is inescapable, but they, of course, have the power to point it out. They are the Ones. They can see the Matrix that you and I can’t. Only they can see, as bell hooks puts it, “the white supremacist capitalist patriarchy.” And while I respect hooks as a rhetorician, I find her conclusion suspect. Let me say, there are absolutely inequalities that exist, problems that exist, but the suggested cause is far too simplistic, as, much like today’s literary criticism, it ignores a lot of contradictory evidence in favor of the anecdotal. Worse still, the solutions are far, far too vague (reform? revolution? change behaviors? change literature?). These issues will not be solved by preachy art: They are solved at the ballot box. (If you became a critic to “make a difference,” try running for office instead.) Art isn’t overly concerned with the right now: It uses the specific to tell us something about the universal.

#

So where do we go from here? I think we need to return to the “scientific” approach proposed by Ransom all those years ago in “Criticism, Inc.”–with some caveats, of course. We have to do a thorough close reading of a text in order to discover the intent, but we also need to stress the other points of the rhetorical triangle as well. The message is important, but so are the ways a text undermines its own theme, the constant battle of binary opposites. We, too, must recognize the biases of the reader and the author and the time and circumstances under which it was produced just as we recognize the importance of “the text itself.”

#

So I’d like to leave you with a lie I tell my literature students every semester. I say: In this class, there are no wrong answers. I know that’s not true, but I say it because I don’t want them to be afraid of analysis, to fear interpretation. However, I realize there are “wrong” answers, invalid answers. You can’t just pull shit out of your ass and say it is: You must demonstrate it through a preponderance of textual evidence. This is the cardinal rule of criticism. Yet as my students’ first paper (on poetry no less) approaches, I let them in on this little distinction between valid and invalid arguments. It would appear that many of Goldsmith’s critics skipped that day of school.

September 17, 2015 / Vito Gulla / 0 Comments

I want to start off this post with a definition–two definitions or really, a distinction. Writers and critics often talk about the responsibility of the artist, what things we should and should not do and how, and as I’ve said in the past, I’m not too fond of burdening creative people with an intent of social justice. (This is not to say art doesn’t provide moral instruction: It is more so that art presents a multitude of moral possibilities and shouldn’t serve merely as propaganda.) That tends to be a recipe for didacticism rather than enlightenment. However, I do think that art itself is meant to do one thing: It shows the world as it is, as the writer sees it. Now I know that may sound absurd, especially when we take into account the many genres available to us. How does the fantasy or sci-fi writer depict the world as it is, when the story takes place in an entirely different universe? Well, the story, no matter the species or world, should connect to those experiences we all share as human beings. There are flaws in human nature, things we don’t like about ourselves as much as there are many strengths. Neither will ever be extinguished. Societies, even those that appear utopian, still give in to our failings. That’s what makes stories worth reading. But if, instead, we depict the world as we want it to be, as we’d like it to be, we begin to tap into something else: pornography. You see, pornography, to me, is not just something that excites or titillates us, but shows us a world we’d like to inhabit. Have you ever watched a porn? Depending on your own personal fetish, you are the viewer of your own particular fantasy. Do you wish women would fawn all over you and decide to have sex with you within moments of meeting you? Do you wish your man would massage you and spend a lot of time on foreplay? Whatever it is that turns you on is there with a few clicks of the mouse. But the world doesn’t operate under those conditions. Our lives are not fantasy, and if you want to see your perfect world in art, you’re only engaging in masturbation.

#

I can’t remember the name of it, but a few years ago a book came out that depicted a homosexual love story in a very conventional way, in the sense that it was merely about two gay people in love with one another. I do not know whether the novel was very good or not, but that’s beside the point. There were two camps of people who had two very different reactions. First, there were the supporters, who felt that the novel was a good one and found it refreshing that the novel didn’t concern itself with the societal struggles of the couple. It was just a love story where the characters happened to be gay. The other side thought the novel avoided the issue, that it should have been a main theme of the book. My problem is with the latter group. Who says that the characters have to face the structural oppression of their culture? Can’t they face other challenges? The story is about their love. The writer’s experience and knowledge are what dictated the focus of the narrative, not his or her political agenda. I’m sure the writer is for equality, and if he or she is gay, they are tapping into their world as it is. Does being gay change the governance of a story? Does it mean that the structure must be completely different? Do the characters have to act a certain way? We seem to want to straddle this line where categories are both important and unimportant, where we are unique and also part of a group. But if our characters can’t represent that unique perspective, then doesn’t that make for fiction that is largely the same?

#

One of the best movies to come out in a while is Mad Max: Fury Road. It’s brilliant in its construction and reminded us how exciting (and visual) films can be. It’s also one of the most feminist films I’ve seen. Furiosa could have easily been one of those hard-nosed, masculinized female characters, the kind that fuck but can’t connect to people. She, however, is Max’s kindred spirit: She is his equal in almost every way. There are some things Max is better at, like killing a bunch of guys in the fog, but she’s a better shot and driver. They work together in perfect harmony. They’re both sensitive and caring, even when they know they shouldn’t be. And really, if Max wasn’t a crazy drifter, I would even think that the two of them would have been more than happy to start a physical relationship to add to their emotional one. They play off one another and serve each other’s stories, helping one another to find redemption. That’s pretty rare on-screen or off.

Unfortunately, we have a lot of narratives that don’t do this–and it’s not because of institutional sexism as so many critics claim. It’s because they’re poorly written, and somehow we’ve forgotten this. (Art is really, really hard. Writing even an essay is rife with traps. It’s hard enough to convey an idea through argument and evidence. When you add story, it gets that much harder. Most of the time, people don’t know what the fuck they’re trying to say with their art.) To me, lovers in any story must be equals; otherwise, what’s the point of putting them together? Han and Leia in Empire and even Jedi are a terrific example. Once their initial courtship ends, they face challenges together. They aren’t spending their time quibbling about stupid shit. They have a mission, and it’s one they attack as a couple. Sure, they might squabble over how best to do it, but they still end up working together as a team.

One thing I find particularly frustrating, in television mostly, is when the writers wedge problems into the relationship–especially after seasons of will-they-or-won’t-they. Why is it that these couples end up bickering in the subplot? Sure, relationships aren’t perfect, but why waste my time with something that shouldn’t be a problem? The goal is achieved. Turn your attention elsewhere. The next goal isn’t making things work: It’s taking on the new challenge or challenger as a team.

#

I read an article just recently that says that “Female characters often aren’t allowed to have their own story arc.” I’m not fond of this particular generalization. I can think of few good stories where this is the case. Every character wants something, and by the end of the story, that character should either succeed or fail. In Die Hard, Holly Gennaro wants to be a successful businesswoman, and she thinks that means she has to sacrifice her relationship with her husband. They, of course, have to put those marital problems aside in order to stop the terrorists, which they do as a team. In the end, she recognizes that they can be together, and presumably, doesn’t need to distance herself from her husband in order to be a success. In Batman Begins, Rachel is Batman’s conscience–and has her own strategy to achieve their common goal. In Iron Man, Pepper Potts’s primary goal is to keep Stark Industries running. The love stories aren’t tacked on: They’re integral to the story because a good writer knows they should never put something in that doesn’t serve a purpose. But there are plenty of writers who don’t recognize this, and that’s just what the author of that article is inadvertently complaining about. It’s not a gender issue. It’s quality control.

#

Critics say we need more “strong female characters” in art. First, what the hell is a strong character? When I hear it described, what it sounds like is a complete character, one who is complex and real, but people tend to take this as “women need to be badass.” I object to that as much as I do the term “strong female character” for its inaccuracy.

Just about every movie nowadays that has a squad of soldiers needs to have that badass female in it. Those characters tend to be as one-dimensional as the rest of the squad. So really, what’s the point? Characters in the background are just scenery. Ripley is complete; Vasquez is just a diversity credit. It doesn’t matter what those ancillary characters are or do, really. If you want a “strong female character,” they can’t come from the background. I think we should recognize that there’s little benefit to making some tertiary or quaternary character a woman, a person of color, a homosexual. How do you characterize this minor player? How can you get your reader to know who they are in the paragraph or two in which they appear? Do we have a shorthand to solve this? We do. They’re called stereotypes.

#

So what makes a “strong” character strong? The answer should have started to reveal itself by now. A strong character is our focal center: It is the protagonist. No other character can compare to them, except maybe the antagonist or love interest or best friend. You can’t render every character as completely as you would like.

And how do we remedy this situation? Obviously, we need more characters of certain categories, but who should create them? Should we burden the predominant writing population (read as white, cis, heterosexual males) with the solution? It might seem blasphemous, but I say no. Critics quibble with any act an author makes, binding them to a strict biological essentialism. They say a writer’s female or ethnic characters are stereotypes or that they’re writing about an experience they’ve never had. (It should be obvious, but I am not suggesting that writers cannot write outside themselves.) But the solution comes from those people of color, those women, those gays and lesbians, those transgendered individuals who bring their own unique experiences to their fiction. I don’t think it’s absurd to say that we tend to write best what we know best. Sure, we might not know what it’s like to shoot an Arab man for no comprehensible reason or to kill a pawnbroker because we want to tap into our own little Napoleon, but we do know what it feels like to be in our own skin. That no one can take away from us. Women, I would bet, write women best. Same with any other category.

If we want more “strong female characters,” we need more strong female writers. There, fortunately, have been quite a few so far: for example, George Eliot, Jane Austen, Zora Neale Hurston, Virginia Woolf, Toni Morrison, Flannery O’Connor, Alice Walker, Alice Munro, Tillie Olsen. (Literature, for the most part, is one of the most democratizing of the narrative artforms.) But frankly, that’s not enough. It’s easy to say that women aren’t recognized enough in the arts, and to a certain extent, that’s true. But the bigger issue is that greatness doesn’t appear in small numbers. Just look at film. Name all the talented female directors. Julie Taymor, Kathryn Bigelow…and then? And neither of those two would I call great. (Though I would argue Taymor is far more talented visually, unfortunately, her films, like David Fincher’s, are only as good as their scripts.) There’s such a small pool of female talent–of any underrepresented group–that greatness among their ranks is limited, if not shown at all. We need a larger sample size.

We tend to forget that dreck is not unique to any one group, especially under today’s microscope of social justice. There are a lot of great male writers; however, there are also a lot more bad male writers. Just look at the latest issue of New Letters. All three pieces of short fiction are written by men, and all three suck. All over America, we have these shitty–but somehow successful–artists, whether James Patterson or Michael Bay. So the question remains: Why shouldn’t those spots go to anyone else?

September 14, 2015 / Vito Gulla / 0 Comments

Last time, we discussed the first part of the writing process. This week, I want to look at a much neglected part of the process, when our work becomes no longer private, when we put it out into the world. In other words, now that you have a finished product, what do you do with it?

There are, of course, a number of options at your disposal, depending on the length, genre, and your goals for the piece. For our purposes, we’ll assume that you want to reach as many eyes as possible. (Or to put it bluntly, we aren’t considering self-publishing.) Furthermore, we’ll try to keep our approach rather broad, so whether you’ve written a poem, a short story, or a novel, you can begin to find the right home for your work.

Research

The first thing you’re going to want to do is find somewhere to submit. Unfortunately, not all publishers are of equal value. Each one is uniquely useful. Some are widely known, with a readership and reach that extends into the thousands or even millions. Others, though small in readers, may be larger in influence. This all boils down to what you hope to achieve.

First, consider its length.

Some publishers won’t even look at a piece if it doesn’t fall within their word counts. If a magazine only publishes short short stories (typically under 1000 words) and you’ve written a 20,000 word novella, that’s probably not the best choice for you, since it will surely be rejected out of hand.

Second, what genre or style is it?

If you’ve written a formalist poem, you’re best off submitting it to publishers who prefer such things. Now there are some places who aren’t so specialized, but it’s all about knowing who puts out work like yours and who doesn’t.

Third, what kind of reputation does this publisher have?

There’s no point in sending your work to a magazine no one has ever heard of. At the same time, however, you have to recognize that the more access that publisher has, the less likely they will be to take you on. Remember: Publishers receive more submissions than they can print. But that shouldn’t stop you, of course. You just have to be aware of reality. Having a story in The New Yorker is a serious achievement in anyone’s career: It will just be really, really hard. You don’t have any control over what happens once your work is in a reader’s hands. But you won’t get anywhere if you don’t submit in the first place.

(Note: You may be wondering how to find these publications and learn about their editorial style and so on. Sadly, the most common answer is to read them. While we should all strive to read more, especially in those magazines in which we aspire to one day find ourselves, it is a bit unreasonable. There are literally thousands of journals in the world today. How, exactly, can one person get familiar with all these places? Better yet, how can he or she afford it? Things are a little more hopeful once you realize this. With websites like The Review Review and Duotrope, we have databases that catalog most of the information about such publishers, which makes this first step easier than at any other time in history.)

Submitting

This is probably the most frustrating, difficult, and lengthy part of the process. You have a polished piece. You know the places who want it. But how do you get them to say yes? You don’t, to put it bluntly. This is why you cast as big a net as possible. You want to send out to as many magazines as you can. It’s a lot like playing the lottery. Even though your chances are absurdly low, it doesn’t hurt to buy a few more tickets.

(A Note on Queries/Submissions: This shit is often made far too complicated. The basic cover letter should be short and straightforward. Let the work speak for itself. Start by addressing the editor by name. Then describe your work in very simple terms. For example, “Please find attached my 2,000-word short story ‘Story Title.’” Finally, give a brief overview of your accomplishments: “I hold an MFA from such-and-such university. My fiction has appeared in this and that magazine.” Thank them for their time, and then peace out.)

I suggest that you organize your submissions into tiers. Start with the places who have the most clout, the ones you’d be most proud to appear in, your S-tier publications. Choose ten or twenty of them who are on the same footing. (These magazines tend to be the ones who pay a lot too.) Then choose another ten or twenty in your A-tier, then ten or twenty in B-tier, then ten or twenty in your C-tier, then another ten or twenty in your D-tier. Don’t submit to them all at the same time however: Send them out in batches. Attack one tier at a time, and once that tier is exhausted, move on to the next.

It should go without saying, but make sure you query each magazine individually. Don’t send off some mass email. Make it as personalized as you can. Most magazines have a masthead section on their website. Address your query/submission to the person in charge of your genre (the fiction editor, the poetry editor, the non-fiction editor).

Lastly, expect to wait–a lot. Most editors won’t get back to you until three months have passed. Some, like The Threepenny Review, have an unbelievable turnaround (three days), but most take their time. Why, exactly, it’s hard to say. Some just have a huge volume of submissions. Many are understaffed. Some are dicks. It’s like anything else, and there’s no one answer that fits all publications. How and why things take so long can only be examined if the magazine is unyieldingly transparent–so good luck ever finding that.

(A Note on Submission Fees (Actually, A Rant): As you send in your work, you’ll find a number of magazines who charge you to submit your work. They often rationalize this as being the same as when people mailed in hard copies. This is a stupid fucking argument. The money you paid in the past to send in a story didn’t go to the magazine: It went to the USPS. Now, three dollars, which is what most magazines charge, isn’t too much money, but it does add up, especially when you’re submitting dozens and dozens of times. The truth about submission fees is that most editors and publishers have no idea how to make their magazines profitable. They don’t even pay themselves the majority of the time. Why, then, should the writer be punished for trying to make them more successful? (There’s no magazine if there are no stories, after all.) It really is bullshit. Furthermore, most magazines that charge submission fees tend to be some of the biggest names in the business. These are often the prestigious university-run journals with storied histories. Why can’t they figure out a better way to support themselves than to exploit the very people who want in? Shouldn’t they, of all people, know how to do this? But like most things American, it’s something people have accepted rather than do anything about. You’ll probably bite that bullet too. How you resist, how you revolt, is up to you.)

Rejection

This will be what you can expect to find in your inbox the majority of the time. It’s just the reality of the competition. There’s a whole slew of reasons why you got rejected. Often, however, the note you’ll receive won’t say it: You’ll get a brief form letter thanking you for your submission and a notice that your work has not been selected.

You may have sent the wrong type of story to the wrong editor. You may need to rewrite your work. The editor may have not read your work very closely. The publication may have just published a story just like yours. The editor may have bad taste. You may have bad taste.

The answers vary widely, but again, you really don’t have control over these people. You can’t make them say yes.

Of course, there are, on occasion, times when you’ll receive something that feels like it was written by a human being instead of a computer. It’s still a no, but it should boost your confidence. These rejections are typically written because the editor believes that you are not a complete fucking idiot. They actually liked something about your work. They might even encourage you to submit something else. Take heed of whatever you find therein. This is a publication you should consider in the future. You’ve managed to develop a relationship with someone. Take advantage of it.

(A Note on Taking Rejection Gracefully: It sucks getting rejected. It sucks more when you read an issue of the magazine you submitted to and find nothing but junk in their pages. And while you should be free to discuss why it sucks, you shouldn’t make a point to call out that magazine to their face. Don’t write the editor and tell them to fuck off–unless you don’t mind burning that bridge. Recognize the difference between criticism and being a dick. For the most part, talk shit about them behind their back.)

Acceptance

This is what you’ve worked towards. All those months have finally paid off. But your work isn’t done yet. Your publisher no doubt has some kind of plan in place to promote your work. (This is typically Facebook posts, tweets, and other forms of social media.) Don’t put that, admittedly small, burden on their shoulders alone. You have friends. You know people. Tell them about it. Support your publisher. They’ve supported you–why not return the favor? And by doing so, you also help out all the other writers who have been published alongside you. Support them too. Develop connections. Network. This is your chance to get noticed.

Just try not to be annoying about it, of course.

September 9, 2015 / Vito Gulla / 0 Comments

For the next week or so, I intend to give an overview of the writing process. There is, however, a part of me that feels that such a series is slightly unnecessary, because process is individual. It is something that we learn very early on, and even though we may not give it a great deal of consideration, it’s something most of us do automatically. There are certain parts of the process we like; there are other parts that we do not like. But we typically do the things that come naturally. Nonetheless, I still think there is some confusion over the process, and many young writers would benefit from at least recognizing the steps and thinking about their own a little more critically.

I also want to mention that, in academia, at least, rhetoricians have overblown the importance of the process. That’s not to say it isn’t important–it is–just that it’s not the most useful idea to systematize. Scholars tend to forget that writing is about choices and making the best choices is what makes some content more appealing than others. After all, the final step is putting the work out there–publishing. Most writing is meant to be public, to be product, and when that happens, nobody cares how many drafts you’ve written. There is no portfolio of your progress. You are judged solely on the merit of your work. All that matters to editors and readers is that it is worth the read.

With those assumptions in mind, so begins my two part series on the writing process, from the germ to publication.

Prewriting

This is the first step, and depending who you are, you’ll either spend a ton of time here or as little as possible. Typically, such people are defined as either outliners or pantsers respectively. I doubt any one person falls firmly on one side of the schema or the other, but this is something very individual. I think it is dictated not only by the writer’s personality but the length and substance of the work.

When you post a tweet, you probably don’t spend too much time thinking about what you’re going to write; however, when you’re working on a novel, you’re probably going to give a little more time to percolate.

You see, prewriting is merely the act of thinking about what you’re going to write, developing your ideas, and coming up with a plan. Short things require less active thought because, most of the time, your brain reaches conclusions so quickly you don’t need to acknowledge them, and long works are frustrating to get through and require more critical thinking along with perseverance.

But, of course, this all depends on how you like to do things.

Personally, I’ve always avoided writing outlines. I remember how, in grammar school, I would turn in papers without the required rough drafts or outlines. (I was not a good student.) My teachers would tell me I was missing out on being a better writer. In some respects, they were right; in others, they were wrong. But that’s because they couldn’t see what was going on inside my head. For me, I liked to take a lot of time to think. I think about what I’m going to write while I’m in the shower, when I’m lifting weights, or as I lay my head down for sleep. That’s my process. I come up with my plans in my head and then put them down on paper and figure everything out as I write. That’s just what works for me.

This is the point about process overall, and why I don’t think it’s as important to stress such an overview. You have to find what works for you. If you like writing a formal outline, do it. If you enjoy putting together a list of ideas, do it. If you prefer to sit down at the computer and throw up on the screen, do it. There’s a whole host of prewriting activities available to you: freewriting, journaling, talking, thinking, clustering, listing, outlining. So if you haven’t figured this out by now, here’s your chance.

This step leads logically to the next.

Drafting

This is when you actually put words on paper. The tool you use doesn’t matter all that much. Some people like to use a pen, others a keyboard. But the way you do it is important. This is also probably the most difficult to explain. How can you talk about something you just do?

When we draft, we’re enacting some kind of plan. We’re not always consciously aware of that plan, of course. It’s part instinct, part intellect. We’re making choices based on our purpose, our audience, our context. Most of all, this is when we listen to our own minds and express those thoughts as truly as we can, when we transcribe the chaos of our consciousness. This chaos is unfortunately somewhat unpalatable, which is why we take the next step.

Revision

The writer who understands the act of revision, of re-seeing their text, is typically the writer of superior prose. It is not simply fixing the commas and crossing your Ts, however. (Those are lower order concerns.) Revision requires rethinking, which is what makes it distinct from editing. We look at the whole of our text, the thrust of it, the tone of it, the organization of it–the higher order concerns–and we ask is this what we mean? Is this the best way to express it? In our drafting stage, we more or less went with instinct, letting the words flow out of us naturally: In revision, we should be ruled by intellect. We tinker, change, rewrite. We temper and rearrange. We take those questions about purpose, our audience, and our context into much greater consideration. We look at the text dispassionately, without emotion. This is the last time anyone will care what we meant versus what we say. Afterward, it becomes product, written in stone, something to be analyzed and scrutinized, something over which we have no control. That’s why this step is so vital. We should recognize that people will take away something different. They will misread your work. Putting a text out into the world is the greatest example of a writer’s impotence. Hence, we should cherish this brief moment, look outside ourselves, and ask if what we have done is the best way to articulate it. If it isn’t, expect to be a prisoner of your own regret.

Editing

This is the final step in the process. This is where we check for comma splices or misspellings. It doesn’t really make a message any clearer for the most part, but it does, however, show our seriousness. By making sure that we’ve corrected our typos, we demonstrate something to our reader. We show them that we are no fool, that we are worth listening to. Unfortunately, this is the step we approach most carelessly, myself included. But this act should be treated with a little more reverence, I think. That concern we have, that dedication, hopefully, rubs off onto our reader.

And so concludes this week’s talk on the process. I know that I don’t offer a lot of specifics here on it, because it is so difficult to pin down. This is much more about trial and error than anything else. It’s about what works for you. Of course, work has to go out at some point too, so we shouldn’t get trapped in the anxiety of our choices either. It should also go without saying that these steps aren’t independent of one another, that they don’t happen at predetermined intervals. Some writers revise while they draft; others write to the end and then fix it. But that’s because there is no one way to do it. Process, after all, is individual.

September 6, 2015 / Vito Gulla / 0 Comments

For a while now, this blog has been promoted as a place that talks about academic issues, the nuts and bolts of writing, and the art of games. One of those, of course, has been much neglected. I might make a passing mention of a game here and there, but overall, I haven’t really taken the time to talk about it at length–which is a shame. Now, however, I intend to rectify this oversight with some musings on an approach to games criticism.

#GamerGate has been widely misunderstood as a whole, in much the same way we view any leaderless grassroots movement. It’s pretty hard to talk about something so amorphous and divergent. Some people seem to think it’s about ethics in games journalism. Others say it’s about the caustic influence of identity politics. Others say it’s an excuse for harassment. In many ways, all of these things are valid, I think, but it also demonstrates how much we like hearing ourselves talk. Whatever the movement represents to you is probably predetermined, and there’s likely no way to sway you to another opinion. Sadly, most of these topics are relegated to different spheres. The people who face harassment in and around the industry, of which there are many, shed light on a serious topic, one we should all be committed against. Of course, that doesn’t mean we should ignore ethical breaches or halt discussions about how to assess art. The people who bemoan the invasion of identity politics have legitimate claims too, issues we have to consider, but it doesn’t mean they should blithely brush aside the discrimination people face. Instead of actually engaging in these peripheral conversations, most of the time, these true believers continue to preach from their megaphones and silence any dissent.

In truth, there are a lot of competing ideas, an argument without focus. Is there sexism in games and the industry? Most likely. Is it annoying to read a review that espouses a philosophy and provides little analysis and cherry-picked evidence without assessing whether a game is “fun” or not? Sure. Are journalists, and this doesn’t even just apply to games journalism, a little too close to their subjects? Absolutely. These things are largely hard to disprove or refute. They just kind of are. However, much like Occupy Wall Street, I don’t think the conversation has been entirely productive. It’s a lot of self-pleasure, appealing solely to one’s own audience: mental masturbation. Frankly, that doesn’t interest me. Instead, I’d rather look at solutions to definitive problems, and since my background is dedicated to the creation and appreciation of art, it only makes sense to talk about games criticism at large.

It’d probably be best to start with an admission: Most mainstream criticism, of all art, is garbage. It’s not about careful consideration, thorough analysis, and nuance. In most cases, the critics we find in newspapers and blogs–those who critique our books, our films, our video games–don’t spend a lot of time crafting their argument. They don’t mine every available piece of evidence. They don’t look at a text as a whole. They don’t qualify their statements or follow through on the logic of their thinking. As Allan Bloom puts it in The Closing of the American Mind, “[T]here is no text, only interpretation.” In other words, they ignore the very thing under scrutiny. They don’t care what it actually says, but instead, they care what they believe it to say. Of course, mainstream critics have deadlines and numbers to meet. It is not, after all, academia, where those things aren’t as important. What I’m trying to say is that contemporary critics react emotionally. They feel their opinions to be true.

And that, to me, is the biggest problem. If we aren’t thorough, if we don’t apply the skill of close reading, how can we really make any kind of aesthetic judgement?

Most games critics don’t seem to have a theoretical basis for their, admittedly, arbitrary scores. They say the graphics are great or that the story drew them in. The truth is, I could give a fuck how it made you feel. What I want to know is why. I want evidence. This, again, isn’t entirely their fault. Now, more than ever, people flip shit if there’s even the suggestion of a spoiler, as if the fun of a piece of art is the mystery of what happens next. This, as I’ve said in the past, is a load of bullshit. It doesn’t ruin the experience: It enhances it. When we know what to look for going in, it makes appreciating the craft that much more satisfying. That does not mean that we should expect only to find what the critic has found. If anything, the real disaster would be to allow one reading to bias our own reading, but such a matter is of an individual’s own sense of discovery, their own inability to think for themselves.

So the question arises: How do we assess this artform? What criteria should we use to choose whether to play or not?

The answer is largely subjective, as we all have different approaches to art, but I think we can, thanks to New Criticism, lay a foundation. Every game is about something, a central tension, an idea, a philosophy it’s trying to express. Our job is to discover it, which we often do quite quickly. In a mere matter of moments, we can articulate the theme of just about anything. Yet if you were to ask us how we had made such a realization, we would presumably say it just is. This, to me, is the first mistake. We need to actually show that theme and how it’s communicated, which means a thorough, careful reading based on a preponderance of evidence. Just as a math teacher asks us to show our work, so must we. The theme should inform every facet of the text: Its setting, its graphics, its design, its gameplay, its characters, its structure. If we talk explicitly, specifically about these elements, then we can form an argument and make our case.

To an extent, there are already a few publications who take this approach, like IGN or The Completionist. However, most reviewers who take such an approach fail to deliver compelling and in-depth evidence for their claims. Again, the text just simply is.

There are others out there, Polygon and Kotaku in particular, who have started to inject moral studies into their reviews. (Polygon’s review of Bayonetta 2 comes to mind.) As an academic, this isn’t all that egregious to me. Feminist, post-colonial, queer, and Marxist criticisms are not exactly new. However, I can understand the anger consumers feel coming into a review expecting to learn whether it’s fun or not and then hearing someone talk about gender roles and objectification. “What does it have to do with the game?” they ask. Most gamers aren’t aware of the jargon of cloistered academics. That’s not to say we shouldn’t practice these approaches or that they can’t serve as a guide for our criticism (it is very easy to marry that framework with New Criticism), but more so that we, as consumers, should know what we’re getting into.

So let’s get to the point here: Games publications should announce their critical lens. I want to know where you’re coming from. How are you looking at a game? What are your criteria? What do you privilege in your assessment? Most editors give some lip-service to these ideas, but none really ever declare a manifesto, a reason for their existence. Presumably, they assume we should learn this by reading the magazine. It’s a nice thought, but I think it’s a bit unreasonable. There’s so much to learn out there, so many opinions. Can I really read through every article you’ve published to get a feel for your publication just to decide if it’s for me? And what if the editor changes and hence their vision? That’s a lot to expect from anyone.

In short, there are two problems with games criticism as I see it. One, when dealing with a specific text, reviewers/critics fail to give specific examples to illustrate their claims and/or investigate their own criteria for what makes a game worth playing, which is why consumers resent the injection of moral studies in what they believe to be an objective analysis. Two, games publications don’t really announce their editorial vision. Though I don’t think these solutions will take hold any time soon, I still hope that at least one publication comes along that adopts such practices. Maybe if such things were commonplace, we’d see a little less anger in the comments and a little more rational, intelligent debate.

August 27, 2015 / Vito Gulla / 0 Comments

It should go without saying, but now, more than ever, we have a near-infinite supply of art available to us. From the comfort of our own beds, we can stream a film, play the latest Mario Bros., look at the paintings housed in the Philadelphia Art Museum, and download bestsellers straight to our e-readers. A lot of people claim that all of these different forms of media are “competing” for our attention. The novelist complains about the filmmaker who steals his audience. The filmmaker decries those who defect from the big screen to the TV screen. Even the video game creator bemoans the players who pass over her game to watch someone else enjoy it on YouTube. But this idea is fundamentally backwards. It assumes that all art is on equal footing, that the novelist and the comic book writer and the painter are somehow at odds with each other. It is true that our time is always limited, and we can’t devote ourselves to disparate artforms. However, this doesn’t mean that they compete with each other. They are different experiences with different strengths, different weaknesses. Therefore, why can’t a person play video games on a Thursday, watch a film on Friday, go to a museum on Saturday, read poetry on Sunday, and listen to music all week long? This false dichotomy only muddies the water, clouding the conversation at hand–a side-show distraction that prevents us from talking about what really matters. The real question is why should we engage with art at all.

As I’ve mentioned before, Hegel gives one of the finest definitions of art: It is the “sensuous presentation of ideas.” In other words, art appeals to our sensory experiences, not solely to entertain, but to teach us about ourselves, about the lives we inhabit, about how to live. This isn’t to say that art is inherently didactic or preachy. (In fact, I would argue that is a symptom of bad art.) It’s more so a case of bringing our attention to serious questions about life. Camus makes us question modernity, morality, our own existence. Bioshock asks whether we have free will, questions about human and player agency, about Randian philosophy. The Dark Knight demonstrates the tension between chaos and order and the moral costs of post-9/11 America. Even Dali, in his surrealist nightmares, asks us to question our own reality, our own sense of the world. Art isn’t just about good technique or grabbing our attention or being unique: It’s a messy attempt to answer unanswerable questions.

Since this blog is dedicated to the literary arts, the question arises, “Why study literature?” Think about it for a minute. Really think about it. A video game is far more interactive than a poem. A film is better at showing imagery. Sculpture is more tactile. You can view an entire painting in an instant, but a novel may take you several hours to finish. So why the hell should I read a book? What makes it so great? What does it do better than anything else? What’s missing from the rest of those forms? A book, more than any other medium, allows us to access the mind in ways that films or painting or video games can’t. Just look at some of your favorite literary characters: Gabriel Conroy, Raskolnikov, Anna Karenina, Oscar Wao, Milkman, Oliver Twist, Holden Caulfield, Nick Carraway. Now think about how many of those characters succeeded on film. Not many. So what’s missing? Why are those characters so endearing on the page but so lifeless on screen? It should be obvious. Those characters are unique to their medium, designed specifically for it. They work, and we care about them, because we have access to their interior lives just as much as their exterior lives. This isn’t to say that all great literature dives feverishly into a character’s soul, but even those minimalist authors–your Hemingways, your Carvers, your Bankses–still give us some insight into the person behind the person. Poetry, one might argue, does this even better than prose, as it cuts out the story (though it may have one) and jumps straight into the soul. No other medium can express emotion or thought like literature. Other forms may have approximations, but they can never claim that as one of their strengths. Just consider how often people complain about narration in film. (It’s visual media, after all.)

We read to discover that we are not alone in the world, that others exist whose experiences are our experiences, that we are all a little bit crazy. We might not know what it’s like to kill an old pawnbroker or to serve in World War II during the bombing of Dresden or to fall madly in love with a man who is not our husband, but we know what it’s like to be human, to make mistakes, to want things, to hide things from ourselves, to suffer.

Seneca, the great Roman Stoic philosopher, when forced to commit suicide by Emperor Nero, comforted his family, telling them, “Why cry over parts of life when the whole of it calls for tears?” His words are just as sage now as they were back then. We tend to think of our momentary hardships–even our own forced suicide–as unique and without equal, and to believe that no one will ever understand what we’ve gone through. While that’s in some ways true, we have to realize that people, no matter where or when they live, have felt a similar way. Life is, as Buddhists observe, dukkha–not just suffering but impermanence. We are all in a fight against time, and literature, thankfully, freezes that one imperfect moment so we may come back to it again and again.

August 12, 2015 / Vito Gulla / 0 Comments

Author’s Note: It seems I’ve fully given in to my philosophical tendencies with today’s post, so I hope you’ll forgive me. While I typically like to keep things here focused on questions about the narrative arts, and writing in particular, I have been reading a lot of the great philosophers as of late, and I guess it was only a matter of time before I felt the need to express some of those ideas divorced from any discussion of art.

So often do we forget the many pleasures life offers us. When we listen to music, whether a rock song or a symphony, we leave it on as background noise, something to cloud the outside world so that it becomes a little less distracting, a little less distinct. We ignore the beauty of a sunrise. We read a book to find meaning rather than to appreciate the charm and magic of the prose. These are simple things, little things, all as valuable as those greater pursuits we strive toward. Yet there is a dark side to anything human. As Aristotle demonstrates in his concept of the golden mean, there are two sides to every coin, an excess and a deficiency. In today’s world, and maybe since philosophers began philosophizing, there is one virtue that seems fundamentally misunderstood: conflict. Any sign of nuance or consideration is tossed aside for unequivocal damnation, especially when it comes to violence and aggression. We act as though, through sheer willpower (and activism) alone, we can frolic in the meadows hand-in-hand, all of us, committed to a peaceful cohabitation. It is a nice ideal to strive for, a utopian paradise, one that is kinder, gentler, and better overall than the world we have and have had in any time before us. That does not mean it will ever happen. And though I’m not fond of shutting down possibilities—it is, after all, theoretically possible—it is very, very unlikely. Such ideals fail to recognize the benefits of conflict and its myriad manifestations.

It typically starts with offense, conflict’s instigator. We are all prone to take offense with some idea or another. Sometimes the offense is benign, a simple statement of preference—I’m a vegan, I’m a creationist, I’m a smoker—which causes the listener to create a conflict. This is probably a matter of projection more than anything, where the very admission makes us question our own assumptions about the way we live our lives. This type of offense mostly stems from misunderstanding more than anything else, and it would be easy to dismiss this as unworthy of our attention. I disagree, however, since such statements make us dwell on our own ideas and offer some kind of defense. This is worthwhile indeed. If we are going to believe in anything, those beliefs should hold up to scrutiny. They shouldn’t, if we believe in them strongly, crumble at the first sign of opposition.

I’ll give you an example. When I was younger, I didn’t think that prostitution should be legal. What my reasons were, I did not know, and if you asked me, I would have probably explained that it just was wrong or offered some other form of circular reasoning. It wasn’t until, late one night, I saw one of those panel-driven talk shows that I realized how arbitrary my ideas were. The panel was discussing prostitution and its illegality, when one of the comics said that she believed that there was no reason for such a legal imposition on women’s choices. She said that if a person wanted to, they should, and that, furthermore, this would afford those people access to basic modern necessities like healthcare. I was, at first, dismayed by her comments, offended even, just because the comic stated her opinion, but when I listened to her reasoning, I quickly changed my position. Her reasoning, to me, was quite sound, and I couldn’t think of any cause to dismiss her. It made me realize that my ideas were based on nothing, that they couldn’t be assumed but must be considered. The conflict here was small, but its effects were infinitely enriching. Conflict made me better, smarter, and most of all, more empathetic.

Now there are, of course, willful acts of conflict, times when offense is properly given, when the speaker makes an attack intending to hurt. I know I’ve done it, and I have no doubt that you have done it as well. We say things like “People who eat meat deserve to die” or “I hate such-and-such.” These statements are explicitly designed to judge, to belittle, to exert power or mastery. I would even say that these admissions are not necessarily bad, merely an expression of resentment for some slight, real or imagined. It is a reasonable thing to do, especially when you meet someone who embodies those things you hate. However, it is not necessarily conducive to a productive and stimulating dialogue. It causes the discourse to stall, as name calling begets name calling, but what happens afterward? Sometimes, we entrench ourselves further and confine ourselves to others who subscribe to our beliefs, which allows us to avoid any more conflict. This is, overall, a bad thing as an echo chamber does little to identify solutions, but I don’t think it wholly bespeaks the possibilities. We are going to find points of disagreement, always, even with those inclined to agree. We could go on shutting out others until we are as lonely as can be, alone with ourselves, trapped in our own minds. Few, if any, I would think, would allow things to get so bad. More likely, there will come that epiphany, that visionary moment, when we recognize that our ideas must be tested, that we must fight back on equal footing, not through name calling or other acts of pettiness, but through reason. We can consider those flaws that others point out. Even the pettiness helps us to get better. We set out to find answers to prove the other wrong. Sometimes, we discover that our ideas are arbitrary; other times, we learn how to fix them, to fortify them. That, I would say, is a worthwhile endeavor. We need opposition. We need to suffer. This is what allows us to grow.

But what of violence, what of aggression? How can we rationalize something so irrational? We assume that ideas can only be defended and tested by reason alone, that they must, when brought up against opposition, seek the better way. Why? We assume that everyone can be swayed to rationality, but is everyone so capable? Think about how many times you’ve been in a situation when some tough guy decides you or someone around him has caused him offense. Maybe you spilled a drink on him accidentally. Maybe you bumped into him because the crowd was so thick, and everyone was so rambunctious. Maybe he just didn’t like the way you looked at him. He invariably starts to shout or maybe even push you. What should you do? What do you do? You probably walk away. That’s the right thing to do, after all, isn’t it? You choose to defuse the situation, robbing him of his victory. You’re the bigger man. Or are you? Aren’t you also submitting to his will? Aren’t you also letting him dictate your actions, however small they may be? Your choice to be non-confrontational is submission, and the balance of power clearly shifts in his favor. He’s allowed to continue along unchecked. But what if you strike back? What if, when he pushes you, you knock him cold? Doesn’t that have a beneficial side effect too? I think it does. He may have to reconsider his own behavior, worried that people exist who are not so quick to fold, or he may shoot up more steroids and hit the gym a little harder. Regardless of the consequences, he is improved. And if you run into him again, he may be more apologetic, but just as possibly, he may return stronger. It is those simple verbal slights, those disagreements we have every day, made physical.

Now, this isn’t an endorsement to start bar fights, even if in the name of self-improvement. There are, of course, other factors to consider, especially somewhere crowded, chief among them collateral damage. We have to recognize when and where to engage as much as what type of engagement is necessary. Of course, collateral damage is unavoidable, especially in today’s mechanized world of assault rifles and nuclear bombs. But each opportunity presented to us should be carefully scrutinized, especially when those horrific side effects can be minimized. These situations dictate our recourse and must be judged from moment to moment. But again, there is a clear benefit. The bully, the tyrant, the asshole comes in many forms, and when we engage them, we are granted a chance for reflection in the aftermath. We can recognize the flaws in our tactics, the people whom we hurt by accident, the amount of force applied. Sometimes, this is enormously regrettable, horrible in fact, when we go too far, but sometimes, it is not enough, for we played things too safe and only made matters worse. And on the rarest occasions, we have weighed our options equally and met the threat with that golden mean of conflict. However, the biggest mistake we can make is to avoid the conflict altogether. If you want to change something, you have to resist. Passivity is just as bad as the barroom bully.

It is all a matter of judgement, of what tool is appropriate. Violence isn’t always the answer. That much should be obvious. But we shouldn’t take anything off the table with regard to meeting opposition. Conflict is necessary for progress, however slow and non-linear it may be. We learn through conflict how to conduct ourselves. We discover that golden mean in imposing our own will, in wielding our own power. When the American colonies revolted against Great Britain, they met force with force. When Socrates saw a man in the market claiming this or that, he struck back with reason. These conflicts make us better and worse. Every action has side effects, and it’s nice to think that logos will always win out. Unfortunately, as long as evil exists (and evil people, as rare as they may be), the pathos of violence will always have a place in our repertoire.

August 10, 2015 / Vito Gulla / 0 Comments

Lately, I have heard a lot about ideas such as cultural appropriation and cultural exchange, ideas of privilege and oppression, ideas which ultimately create a quandary for the artist. Of particular note have been the increased calls for diversity, both behind the art and in the thing itself. These goals, I believe, are admirable and well within reason. They are largely benign, of neither insult nor disfigurement to American art at large; instead, they are to our shared benefit. We should have a plethora of unique and boisterous voices to admire. Good art is always worth striving for. However, there seems to be a strain of these criticisms that is ill-defined at best, marked by an uncertainty, an inability to articulate exactly what the critic wants. The closest I have to a definitive statement comes from J. A. Micheline’s “Creating Responsibly: Comics Has A Race Problem,” where the author states: “Creating responsibly means looking at how your work may impact people with less structural power than you, looking at whether it reifies larger societal problems in its narrative contents or just by existing at all.” There are a couple of ideas here that I take issue with, ones that don’t necessarily mesh with my ideals of the artist.

Hegel wrote that “art is the sensuous presentation of ideas.” Nowhere in that definition is the suggestion that art comes attached with responsibilities. I’m not saying that art doesn’t have the power to shape ideas, but more so, there is often a carefully considered and carefully crafted idea at the heart of every work of art. That overarching theme is given precedence, and a critic who fixes on whatever other points he or she discerns in a work fails to recognize the text’s core. In other words, how can we present evidence of irresponsibility in ideas that are not in play?

This, no doubt, branches out of the structuralist and post-structuralist concepts of binary opposition, and most of all, deconstruction’s emphasis on hierarchical binaries. I readily concede that such things exist in every text: love/hate, black/white, masculine/feminine, et cetera. However, the great flaw in this reasoning is the assumption that these binaries, even when they arise unconsciously, conclusively state a preference in the whole. Such thinking is a mistake. If we reason that such binary hierarchies exist, why should we conclude that they are unchanging and fixed? Such an idea is impossible, regardless of how much or how little the artist invests in their creation. Let’s take a look at a sentence–a tweet, in fact, from Roxane Gay–in order to make such generalities concrete:

I’m personally going to start wearing a lion costume when I leave my house so if I get shot, people will care.